A lot of webmasters probably had a moment of panic over the past day or so when they opened Search Console (or their e-mail clients) and found a message similar to the one below:

- Googlebot cannot access CSS and JS files on http://[your URL here]/

Step One: Don’t panic!

Chances are that your robots.txt file is blocking Google’s crawlers from the CSS and JS files that are used to render your pages. Where WordPress is concerned, blocking crawlers from certain directories was pretty much standard practice, because common wisdom said you did NOT want those directories crawled. So your robots.txt probably looks similar to this:

User-agent: *

Disallow: /wp-admin/

Disallow: /wp-includes/

Disallow: /wp-content/

The thing is, Google wants to see those files: instead of just fetching a page’s HTML, it renders the page in full to get an accurate picture of how it appears in a user’s browser, and this has been the case since last year.
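To see what the rules above mean for a crawler, here is a minimal sketch using Python's standard-library `urllib.robotparser`. The `example.com` URLs, the `mytheme` theme name, and the `blocked_for` helper are made up for illustration, and the parser is only an approximation of how Googlebot reads robots.txt (it is fine for plain path prefixes like these, but as far as I know it does not handle Google's wildcard extensions).

```python
from urllib.robotparser import RobotFileParser

# The "traditional" WordPress rules from above, written without blank
# lines between the directives.
RULES = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-includes/
Disallow: /wp-content/
"""

# Hypothetical URLs, for illustration only.
URLS = [
    "http://example.com/",
    "http://example.com/wp-includes/js/jquery/jquery.js",
    "http://example.com/wp-content/themes/mytheme/style.css",
]


def blocked_for(agent, urls, rules=RULES):
    """Return the URLs that `agent` may not fetch under `rules`."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    for url in blocked_for("Googlebot", URLS):
        print("blocked:", url)
```

Run as is, this should report the script and the stylesheet as blocked and leave the home page alone, which is the situation the warning describes.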

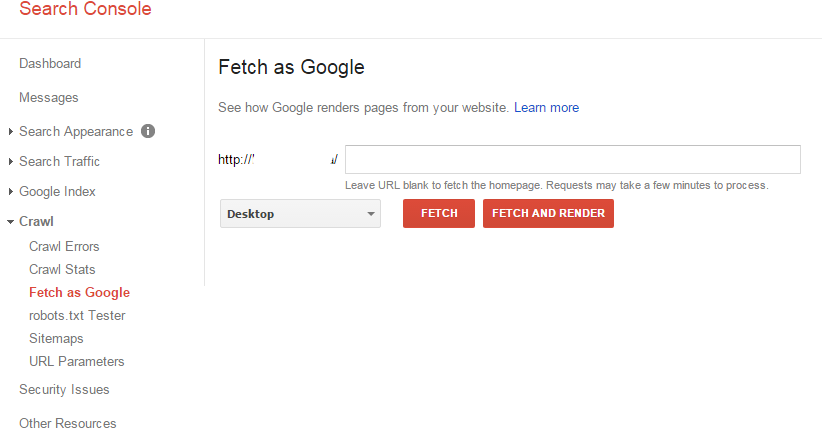

You can test this for yourself by logging into Search Console, going to the Fetch as Google page, and clicking Fetch and Render.

After a minute or two it should return a result that lists the blocked resources, that is, the files you may inadvertently be hiding from the crawlers.
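If you would like a rough local approximation of that blocked-resources list, the sketch below pulls script and stylesheet URLs out of a page's HTML and checks the same-host ones against a set of robots.txt rules. It is not how Search Console works internally; the sample HTML, the `example.com` and `cdn.example.net` hosts, and the `ResourceCollector` and `blocked_resources` names are all assumptions for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse
from urllib.robotparser import RobotFileParser


class ResourceCollector(HTMLParser):
    """Collect script sources and stylesheet hrefs from an HTML document."""

    def __init__(self):
        super().__init__()
        self.resources = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "script" and attrs.get("src"):
            self.resources.append(attrs["src"])
        elif tag == "link" and attrs.get("href"):
            if "stylesheet" in (attrs.get("rel") or "").lower():
                self.resources.append(attrs["href"])


def blocked_resources(page_url, html, robots_lines, agent="Googlebot"):
    """Return same-host CSS/JS URLs on the page that the rules block."""
    collector = ResourceCollector()
    collector.feed(html)
    parser = RobotFileParser()
    parser.parse(robots_lines)
    host = urlparse(page_url).netloc
    blocked = []
    for ref in collector.resources:
        url = urljoin(page_url, ref)
        # robots.txt applies per host, so skip files served from elsewhere.
        if urlparse(url).netloc == host and not parser.can_fetch(agent, url):
            blocked.append(url)
    return blocked


# Hypothetical page and rules, for illustration only.
SAMPLE_HTML = """
<html><head>
<link rel="stylesheet" href="/wp-content/themes/mytheme/style.css">
<script src="/wp-includes/js/jquery/jquery.js"></script>
<script src="https://cdn.example.net/lib.js"></script>
</head><body>Hello</body></html>
"""
SAMPLE_RULES = [
    "User-agent: *",
    "Disallow: /wp-includes/",
    "Disallow: /wp-content/",
]

if __name__ == "__main__":
    for url in blocked_resources("http://example.com/", SAMPLE_HTML, SAMPLE_RULES):
        print("blocked:", url)
```

For a real site you would feed in the page's actual HTML and the lines of its live robots.txt; the Fetch and Render report remains the authoritative view.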

If you do a search you’ll see a fair number of articles about this. Although the change seems to have rolled out last year, Google is only now sending notifications to webmasters who use Search Console.

You can find a fairly active discussion about this on the WordPress Support Forums, and there are a number of solutions worth trying. Below is an example, but keep in mind that this applies to WordPress websites specifically, and you’ll have to tweak and modify it a bit based on which CMS you’re using.

User-Agent: *

Disallow: /wp-*

User-Agent: Googlebot

Allow: /*.js*

Allow: /*.css*

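The intent of the above is to block crawlers from the wp-admin, wp-content, and wp-includes directories while still allowing Google to crawl the JS and CSS files they contain. One caveat: Google documents that Googlebot obeys only the most specific user-agent group that matches it, so once there is a dedicated Googlebot group containing nothing but Allow lines, the Disallow under User-Agent: * no longer applies to Googlebot at all. If you want Googlebot kept out of a directory such as /wp-admin/, repeat that Disallow line inside the Googlebot group. Yoast, for its part, argues that there is no real reason to block crawlers from those directories in the first place. Some may still feel uncomfortable about opening up their /wp-admin/ folders to crawling, so I’ll leave the decision up to you. Keep in mind, though, that any rule which still blocks a CSS or JS file Google needs may mean you keep receiving the warning.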
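One thing worth checking with a file like this is which group each crawler actually ends up following. The sketch below is a small hand-rolled matcher, written under the assumption that it mirrors Google's documented behaviour: a crawler uses only the most specific matching user-agent group, `*` matches any run of characters, a trailing `$` anchors the end of the path, and the longest matching pattern wins, with Allow winning ties. The function names are mine, and other crawlers may interpret the same file differently.

```python
import re

ROBOTS_TXT = """\
User-Agent: *
Disallow: /wp-*

User-Agent: Googlebot
Allow: /*.js*
Allow: /*.css*
"""


def parse_groups(text):
    """Return {user-agent (lowercase): [(directive, pattern), ...]}."""
    groups, agents, last_was_agent = {}, [], False
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if not last_was_agent:
                agents = []  # a new group starts here
            agents.append(value.lower())
            groups.setdefault(value.lower(), [])
            last_was_agent = True
        elif field in ("allow", "disallow"):
            for agent in agents:
                groups[agent].append((field, value))
            last_was_agent = False
    return groups


def pattern_matches(pattern, path):
    """Prefix match where '*' is a wildcard and a trailing '$' anchors the end."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    regex = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.match(regex + ("$" if anchored else ""), path) is not None


def is_allowed(groups, agent, path):
    """Apply only the most specific group; the longest matching rule wins."""
    agent = agent.lower()
    named = [ua for ua in groups if ua != "*" and ua in agent]
    rules = groups[max(named, key=len)] if named else groups.get("*", [])
    best = ("allow", "")  # no matching rule means the path is allowed
    for directive, pattern in rules:
        if pattern and pattern_matches(pattern, path):
            longer = len(pattern) > len(best[1])
            tie = len(pattern) == len(best[1]) and directive == "allow"
            if longer or tie:
                best = (directive, pattern)
    return best[0] == "allow"


if __name__ == "__main__":
    groups = parse_groups(ROBOTS_TXT)
    for agent in ("Googlebot", "Bingbot"):
        for path in ("/wp-admin/options.php", "/wp-includes/js/jquery.js"):
            print(agent, path, is_allowed(groups, agent, path))
```

Under these assumptions Googlebot comes out as allowed on both paths, including /wp-admin/options.php, because its own group contains no Disallow lines, while a crawler without a named group (Bingbot here) falls back to the `*` group and is blocked from both.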

As a reference, this is what our current robots.txt looks like:

User-agent: *

Disallow: /wp-admin/

Disallow: /forum/

Disallow: /plugin/maps/

Disallow: /plugin/slider/

Disallow: /register/

Disallow: /forums/

Disallow: /general-support/

User-Agent: Googlebot

Allow: /*.js*

Allow: /*.css*

So, as you can see, there’s already a lot of variation depending on your exact setup. Go through your warnings, if you have any, and let us know on Facebook or Google+ how you went about fixing them.